As we start the New Year, it’s hard to ignore the massive disruption the automotive industry is undergoing. Affectiva is seeing a growing amount of inbound interest from major OEMs and Tier-1 suppliers, and the automotive AI software we are building will be used to enhance in-cabin sensing: understanding the occupants’ experience in the vehicle (including their mood and cognitive load), improving the HMI experience, and building trust. All of this will transform the future of mobility as we know it, and that could happen sooner than you might think.

For the automotive industry specifically, here are three trends that I believe will be critical as we kick off 2018:

1. People analytics will sit at the center of the auto industry’s disruption

Cars are becoming software platforms on wheels, and this notion will become central to the way OEMs market to consumers and the way consumers buy. As autonomous capabilities advance, consumers will stop judging a vehicle mainly by its driving performance and will instead want to know which OEM can offer them the best in-cabin experience while they travel from Point A to Point B. Which car can keep me the safest? The most productive? The most entertained? The most relaxed?

Amid this disruption, incumbent OEMs and Tier-1 suppliers are increasingly aware that they need to adopt AI now, not only to address the environment outside the vehicle but also to understand the in-cabin experience. Semi-autonomous and fully autonomous vehicles will require an AI-based computer vision solution to ensure safe driving, seamless handoffs to a human driver, and an enriched travel experience based on the emotional, cognitive and wellness states of the occupants.

2. Solving the “hand-off” challenge will become increasingly important

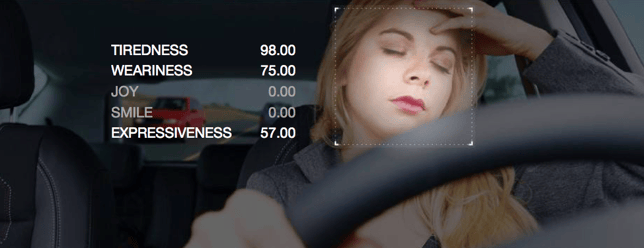

Deciding when a “hand-off” should pass control between the vehicle and the driver is a life-or-death issue for Level 3 and Level 4 vehicles. OEMs must solve this challenge with next-generation intelligent technologies to drive adoption of semi-autonomous vehicles.

Solutions will be rooted in computer vision and multi-modal AI that measures a person’s facial expressions, voice, gestures, body language and more, to ensure the driver is awake, alert and engaged. How OEMs solve this problem will likely alter the fate of the industry: do OEMs keep working on this challenge for semi-autonomous vehicles, or do they skip it altogether and focus on driverless vehicles?

3. With the help of Emotion AI, time spent in cars, even stuck in traffic, will be enjoyable and productive

Commuting to work through gridlock can be miserable. With increasingly autonomous capabilities enabled by Emotion AI, vehicles will adapt the in-cabin experience to the occupants’ mood and desires, transforming an ordeal like traffic into a productive, even positive, event.

Stress about losing time in traffic will go out the window because people can be just as productive while traveling as they would be at their desk. People’s cars will know them well and tailor the trip to their needs, whether that means catching up on work email or relaxing with a favorite TV show. And as the sharing economy tightens its grip on society, OEMs will use adaptive AI to create a personalized in-cabin experience for passengers even when they ride in a car that isn’t their own, like an Uber or Lyft.

As 2017 came to a close, I spent a lot of time reflecting on the past year and thinking about what's in store for 2018 and beyond. In fact, a number of my predictions have already been published in Xconomy, Forbes, and more.

CES is next week, and we'll be speaking on "The Roadmap for Self-Driving Cars" panel at 11:30 AM on January 9th. The panel will cover how AI and other technologies are improving self-driving cars and how to safely integrate them onto our roads.

I'm also pleased that Bryan Reimer, Ph.D., will be featured in our next webcast, "The Future of Mobility," on Feb. 1st. Bryan is one of the leading experts on human behavior in automotive environments, as a Research Scientist in the MIT AgeLab and the Associate Director of The New England University Transportation Center at MIT. I'm excited to hear him discuss how rapidly the importance of monitoring driver state has grown, the challenges involved in doing it, and the new technologies that will be critically important in autonomous vehicles. So save the date, and I hope you will join us then.

Interested in learning more about the importance of driver state monitoring? Access the Future of Mobility webcast recording here: “The Future of Mobility: Trends and Opportunities for the Next Generation of Vehicles.”