By: Ashley McManus, Marketing Manager; featuring Kelly Antonini, Roboy’s Collaboration Angel, and Rafael Hostettler, Project Manager of Roboy

Human-computer interaction is undergoing a fundamental shift. From Siri on your iPhone to the controls in your car, AI systems are becoming more conversational and more relational. Robots designed to engage with humans need to be social and emotionally intelligent: they must be able to sense human emotions and adapt their actions accordingly, in real time.

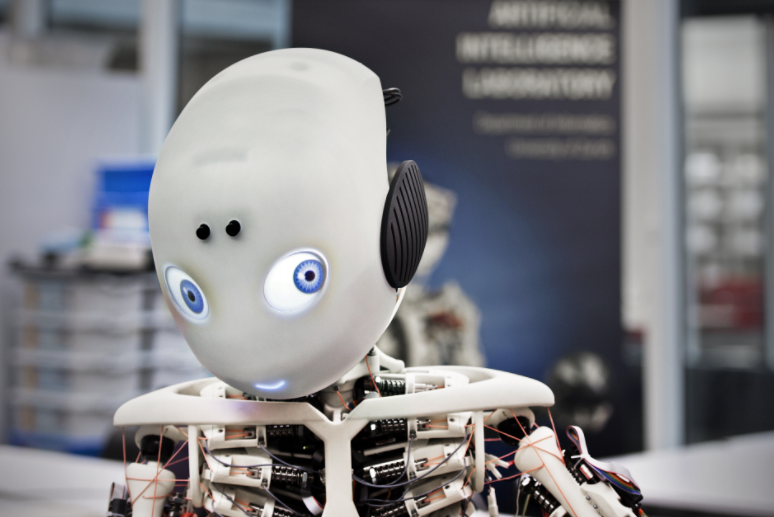

Our emotion AI technology can infuse companion and service robots with emotional intelligence, making them truly social, empathic and more effective: in short, bringing them to life. Roboy, an Affectiva SDK user, was designed with this in mind and is probably the most human robot in the world. Roboy recognizes people’s emotions and uses them to make his conversations with humans more engaging and understanding. Roboy has had extensive media coverage and, in three years, has attended 70 events worldwide. For further information, visit roboy.org or check out their Facebook page.

We interviewed Kelly Antonini, Roboy’s Collaboration Angel, and Rafael Hostettler, Project Manager of Roboy, about how the robot works, how it was developed, and what they see as the future of social robotics.

What’s the pitch for Roboy?

We create a platform for anthropomimetic robot development that unites students, researchers, companies and artists from a broad spectrum of disciplines. We strive to build an open-minded community that boldly co-evolves the hardware and software of the robots it builds. In pursuit of our vision, we venture into unexplored technological spaces. To ensure unrestricted development, we keep all results open source and provide value to our stakeholders through the experience aggregated in the platform. Through public displays, art and public talks, we actively shape the public perception of robotics to prepare the cultural ground for our innovation.

What does Roboy do and how does he work?

Roboy has a human-like skeleton, a human-like muscle structure, and incredibly cute looks. The goal of the Roboy project is to advance humanoid robotics to the point where robots are as capable as human bodies (as fast, versatile, robust, flexible, and so on). He is the first prototype in a genealogy of Roboys. He is also a soft robot research platform for studying muscle coordination, a messenger of a new generation of robots that could share our living space with us, and the first anthropomimetic robot in the Human Brain Project (HBP).

He is currently being developed at the Technical University of Munich, Germany, by an interdisciplinary community of enthusiasts made up of engineers, managers and artists, students and professionals alike.

Where did you get the idea to build him?

Roboy was born in the Artificial Intelligence Laboratory of Prof. Rolf Pfeifer in Zurich, Switzerland. After more than 30 years of research into embodied robotics, Roboy was conceived as an ambassador for this kind of robotics. He was presented at the ROBOTS ON TOUR event, where the laboratory celebrated its 25th anniversary. He was designed to be anthropomimetic, compliant and tendon-driven, with 48 motors and a 3D-printed skeleton. Roboy was built with the support of private companies, universities and many helping hands.

What was your process in building him?

Roboy’s development milestones were:

| Date | Milestone |
|---|---|
| June 2012 | Project kick-off |
| August 2012 | First CAD draft |
| September 2012 | First parts 3D printed |
| October 2012 | Torso assembly begins |
| End of November 2012 | First movement in the torso |
| January 2013 | Delivery of legs |
| February 2013 | Delivery of head from Sedax |
| Mid-February 2013 | Final assembly |
| March 8, 2013 | Unveiling of Roboy at ROBOTS ON TOUR in Zurich, Switzerland |

How did you arrive at Affectiva's technology to help achieve your vision?

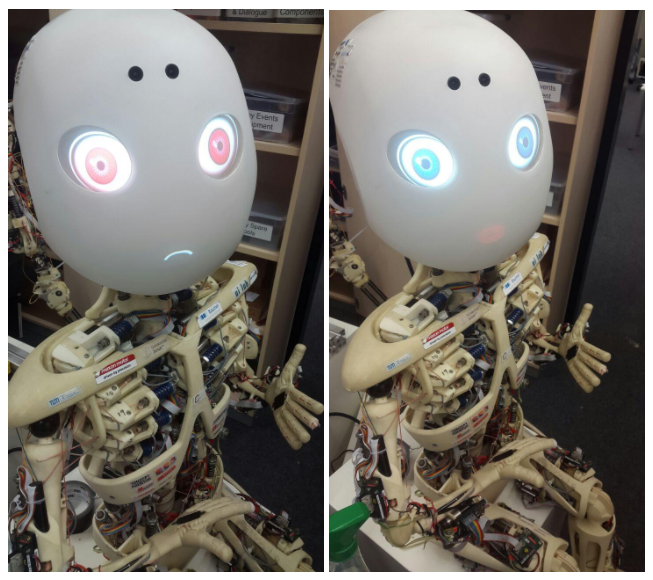

Affectiva was one of the sponsors of Hack Roboy, the very first Roboy hackathon. One of the winning teams at the hackathon used Affectiva’s SDK to build an interactive game with Roboy. We use Affectiva’s SDK to detect the emotions of the people Roboy sees, and Roboy then reacts to these emotions, as shown in the images below.
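As a rough illustration of this sense-then-react loop, here is a minimal sketch that picks one reaction from per-frame emotion scores. It does not use Affectiva’s actual API: the score dictionary (emotion name mapped to a value from 0 to 100), the smoothing window, the threshold and the reaction labels are all hypothetical.

```python
from collections import deque

# Hypothetical reaction table: emotion name -> robot behaviour label.
# These names are illustrative only and are not part of any real SDK.
REACTIONS = {
    "joy": "smile_back",
    "surprise": "raise_eyebrows",
    "sadness": "comfort",
    "anger": "calm_down",
}


class EmotionReactor:
    """Smooths per-frame emotion scores and picks a single reaction."""

    def __init__(self, window: int = 5, threshold: float = 50.0):
        self.threshold = threshold  # minimum averaged score that triggers a reaction
        # One rolling buffer of recent scores per emotion we can react to.
        self.history = {name: deque(maxlen=window) for name in REACTIONS}

    def update(self, scores: dict) -> str:
        """Feed one frame of scores (assumed 0-100 per emotion); return a reaction label."""
        for name, buf in self.history.items():
            buf.append(scores.get(name, 0.0))  # missing emotions count as 0
        # Average over the buffered frames to suppress single-frame flicker.
        averages = {name: sum(buf) / len(buf) for name, buf in self.history.items()}
        best = max(averages, key=averages.get)
        if averages[best] >= self.threshold:
            return REACTIONS[best]
        return "neutral"
```

Averaging over a short window trades a little responsiveness for stability: once the window has filled, a brief spike has to outweigh the surrounding frames before Roboy reacts to it.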

Which features of Roboy are you most excited about?

Roboy is the most human and likely the cutest robot in the world, and we are very excited about both of these facts. While most robots have motors in their joints, Roboy uses muscles and tendons, as humans do. This not only makes his motions very smooth, natural and safe to interact with, but also makes him the perfect robot for the Human Brain Project: to test theories about how the brain controls a body, you need a body that works like a human body.

And because he is so cute, everyone likes Roboy. That is why Roboy goes to events, talks to people, and acts as an ambassador for a new kind of robotics. We, like everyone else, love him for that.

What is the next step for your robot?

Mobility, communication and Virtual Reality (VR) are the areas the team is currently working to improve.

For a long time, Roboy has been confined to sitting on his custom-built box. The “Stand Up!” team is currently investigating new control strategies for human-sized legs using state-of-the-art machine learning techniques. In parallel, smaller muscle units are being developed to fit into smaller, more robust legs.

By extending Roboy’s set of cognitive skills, the “Hi There!” team is enabling Roboy to communicate with people independently. Once he can detect human presence and recognise people and their facial expressions, Roboy will become a fluent speaker and a great listener.

The “Virtual Roboy” team is working hard to bring Roboy into Virtual Reality (VR) and to emulate his sensory inputs, such as vision and hearing. Today’s VR technology will let anyone experience the world as Roboy does and allow us to simulate Roboy’s behaviour at any time.

Are there any plans to build another robot like this in the future?

Within the next 12 months, a new Roboy will be developed. Roboy Junior 2.0 will be better, smarter and more humanlike. We will work hard to make him as conversational as possible, and maybe he will even walk.

Do you have any other advice for those looking to build similar social robots of their own?

It’s important to understand the psychology of robotics. One reason Roboy is designed to be so cute and likeable is that it lowers people’s expectations of him during interaction: people are simply more willing to excuse lapses and accept shortcomings.

When interacting with other humans, we follow certain social rules and behaviours, and adhering to them makes interaction efficient. The big challenge for robotics is to live up to these expectations and social cues. For Roboy, for example, we found that the biggest limiting factor was the time required to detect when a human had finished talking. This delayed every reply, making the interaction slow and unnatural.
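To see why end-of-turn detection adds delay, consider a simple endpointer that declares a turn finished only after a fixed stretch of silence. This is a generic energy-threshold technique, not Roboy’s actual implementation, and the frame length, energy threshold and silence timeout below are illustrative assumptions.

```python
from typing import Optional, Sequence


def find_end_of_turn(
    frame_energies: Sequence[float],
    frame_ms: int = 20,             # assumed duration of one audio frame
    energy_threshold: float = 0.01,  # assumed energy above which a frame counts as speech
    silence_ms: int = 700,           # assumed silence needed before the turn is closed
) -> Optional[int]:
    """Return the time (ms) at which a silence-timeout endpointer ends the turn.

    Returns None if no speech was heard or the silence timeout has not yet elapsed.
    """
    frames_needed = silence_ms // frame_ms  # consecutive silent frames required
    heard_speech = False
    silent_run = 0
    for i, energy in enumerate(frame_energies):
        if energy > energy_threshold:
            heard_speech = True
            silent_run = 0  # any speech frame resets the silence counter
        elif heard_speech:
            silent_run += 1
            if silent_run >= frames_needed:
                return (i + 1) * frame_ms  # decision is made at the end of frame i
    return None


# One second of speech (50 frames of 20 ms) followed by silence.
energies = [0.5] * 50 + [0.0] * 100
print(find_end_of_turn(energies))  # 1700
```

With these settings, a speaker who stops at 1,000 ms is only recognised as finished at 1,700 ms, so the whole silence timeout is added to every reply before speech recognition or any network round trip even begins. Shortening the timeout reduces that latency but makes the robot more likely to cut people off during natural pauses.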

How can someone try Roboy? Where can they get him?

Roboy has had extensive media coverage and in three years has attended 70 events worldwide. You can book Roboy to attend one of your events, or visit him when he is on display at an event near you. Roboy’s mechanical design and software are completely open source. No expertise, idea or invention belongs to any single entity, and everyone has the chance to advance Roboy’s technology.

In your opinion, what obstacles or milestones do social robots need to overcome / achieve in order to be more mainstream?

First and foremost, robots need to provide real value to users. In the end, social robots are interfaces to information and, as such, compete with alternative approaches such as touch screens and mobile phones. So far, there are very few use cases in which social robots clearly outperform these more traditional approaches. To a large extent, this is because natural language understanding still has a long way to go. While speech recognition has made tremendous progress recently, turning the resulting text into contextual understanding and acting on it is still a largely unsolved problem. Thus, whenever a social robot does not know how to interpret what the user said, the illusion of an intelligent agent breaks down and the robot must fall back on more traditional ways of interaction, e.g. a touch screen.

Secondly, although speech recognition has made tremendous progress, the difficulty of detecting the beginning and end of an utterance, together with processing delays (e.g. the round-trip time for the data when processing is done online), makes the interaction sluggish. Also, when talking with other humans, we are used to interrupting ongoing speech if we conclude that what follows is not what we hoped for.

Furthermore, if we didn’t grasp our partner’s full message, we use complex questioning patterns to reconstruct the missing information, something that is still hard to model in dialogue systems.

Lastly, the limited scope of the robot’s knowledge makes it hard for humans to figure out what functionality the virtual agent actually offers. A mismatch in expectations immediately leads to frustration, which is hard to recover from. In contrast, touch interfaces can present all available options to the user in a simple way, making it easy to discover what information is and is not available. With a social robot, however, a failure to understand can have several causes, so it is hard for the user to tell whether a limitation is technical or contextual. Users then often try to adapt to the system by rephrasing their questions, which makes the interaction inefficient and frustrating.

About Rafael Hostettler

Fascinated by the complexity and beauty of everything, Rafael Hostettler has always had a hard time limiting himself to a single thing. That's why he has an MSc in Computational Science from ETH Zurich, where he learnt to simulate just about everything on computers, so he didn't have to. Now he builds robots that imitate the building principles of the human musculoskeletal system and travels the world with Roboy, the 3D-printed robot boy who plays in a theatre, goes to school and captivates audiences with his fascinating stories. He also co-founded General Interfaces GmbH, which builds things so intuitive that you don't even need to learn how to use them, and Medical Templates AG, which applies that principle to interventional radiology. Ultimately, all his endeavours converge towards a single goal: to become a robot, so he can stay curious forever. If you have any further questions or proposals, please contact him at rh@roboy.org.

About Kelly Antonini

A recent graduate of the Technical University of Munich, Germany, with an MSc in Earth Oriented Space Science and Technology (ESPACE), Kelly Antonini is “Roboy’s collaboration angel”. She is in charge of managing collaborations and coordinating Roboy’s activities. You can reach her at business@roboy.org.

About Roboy:

Roboy is a humanoid robot currently being developed at the Technical University of Munich, Germany. He is a soft robot with a human-like skeleton and muscle structure. In the near future, Roboy will be able to walk and hold conversations.

Working on robots is very expensive, especially when it comes to hardware and electronics. If you would like to support the further development of Roboy and help him become an integral part of the next generation of humanoid robots, please take a look at our Patreon account: patreon.com/Roboy

Follow Roboy on Twitter: @RoboyJunior