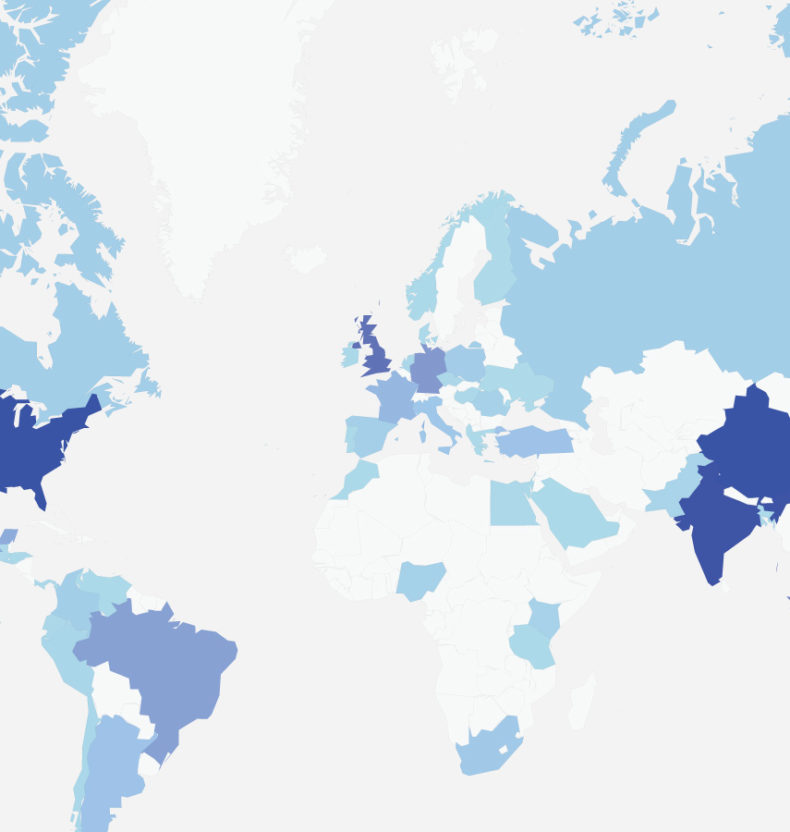

How do people smile? Are typical smiles short and fleeting, long and sustained, or something in between? This paper by researchers at Affectiva offers some insights through an analysis of facial expression data from more than 1.5 million face videos collected in more than 90 countries.

Abstract

Facial behavior contains rich non-verbal information. However, to date, studies have typically been limited to the analysis of a few hundred or a few thousand video sequences. We present the first-ever ultra-large-scale clustering of facial events extracted from over 1.5 million facial videos, collected while individuals from over 94 countries responded to one of more than 8,000 online videos. We believe this is the first example of what might be described as “big data” analysis in facial expression research. Automated facial coding was used to quantify eyebrow raise (AU2), eyebrow lowerer (AU4), and smile behaviors in the 700,000,000+ frames. Facial “events” were extracted, characterized by a set of temporal features, and then clustered using the k-means algorithm. By verifying the observations in each cluster against human-coded data, we were able to identify reliable clusters of facial events with different dynamics (e.g., fleeting vs. sustained smiles, and smiles with a rapid vs. slow offset). These events provide a way of summarizing the behaviors that occur without prescribing their properties in advance. We examined how these nuanced facial events were tied to consumer behavior and found that smile events, particularly those with high peaks, were much more likely to occur during viral ads. Because the data are cross-cultural, we also examine the prevalence of different events across regions of the globe.
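The abstract does not spell out exactly how an "event" is defined or which temporal features were used, so the following Python is only a minimal sketch under stated assumptions, not Affectiva's pipeline. It assumes an event is a contiguous run of frames where a per-frame smile score (assumed to range from 0 to 100) stays at or above a hypothetical threshold. Each event is summarized by four hypothetical features: duration, peak, rise time, and decay time. The features are z-scored and the events are grouped with plain Lloyd's k-means. The function names, the threshold of 10, and the assumed frame rate of 15 fps are all illustrative.

```python
import math
import random
from typing import Dict, List, Optional, Sequence, Tuple


def extract_events(signal: Sequence[float], threshold: float = 10.0) -> List[Tuple[int, int]]:
    """Return (start, end) frame spans, end exclusive, where signal >= threshold.

    Hypothetical event definition: a contiguous supra-threshold run of frames.
    """
    events: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for i, value in enumerate(signal):
        if value >= threshold and start is None:
            start = i  # event onset
        elif value < threshold and start is not None:
            events.append((start, i))  # event offset
            start = None
    if start is not None:  # event still active at the final frame
        events.append((start, len(signal)))
    return events


def event_features(signal: Sequence[float], start: int, end: int, fps: float = 15.0) -> Dict[str, float]:
    """Hypothetical temporal features of one event; fps is an assumed frame rate."""
    segment = list(signal[start:end])
    peak_idx = max(range(len(segment)), key=segment.__getitem__)
    return {
        "duration_s": (end - start) / fps,
        "peak": float(segment[peak_idx]),
        "rise_s": peak_idx / fps,  # onset to peak
        "decay_s": (len(segment) - 1 - peak_idx) / fps,  # peak to offset
    }


def standardize(rows: List[List[float]]) -> List[List[float]]:
    """Z-score each feature column so no single unit dominates the distance."""
    cols = list(zip(*rows))
    means = [sum(c) / len(c) for c in cols]
    stds = [math.sqrt(sum((x - m) ** 2 for x in c) / len(c)) or 1.0 for c, m in zip(cols, means)]
    return [[(x - m) / s for x, m, s in zip(row, means, stds)] for row in rows]


def kmeans(points: List[List[float]], k: int, iters: int = 100, seed: int = 0) -> List[int]:
    """Plain Lloyd's k-means with random initial centroids; returns a label per point."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    labels: Optional[List[int]] = None
    for _ in range(iters):
        # Assignment step: nearest centroid by squared Euclidean distance.
        new_labels = [
            min(range(k), key=lambda j: sum((a - b) ** 2 for a, b in zip(p, centroids[j])))
            for p in points
        ]
        if new_labels == labels:  # assignments stopped changing: converged
            break
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the old centroid if a cluster empties
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return labels or []


def cluster_events(signal: Sequence[float], k: int = 2, threshold: float = 10.0,
                   fps: float = 15.0, seed: int = 0):
    """Extract events from one score trace, featurize, standardize, and cluster them.

    In practice events would be pooled across many videos before clustering;
    a single trace is used here only for illustration (it needs >= k events).
    """
    events = extract_events(signal, threshold)
    feats = [event_features(signal, s, e, fps) for s, e in events]
    rows = standardize([list(f.values()) for f in feats])
    return events, feats, kmeans(rows, k, seed=seed)


if __name__ == "__main__":
    # Synthetic trace: two brief low-peak smiles and two longer high-peak smiles.
    trace = ([0] * 5 + [20, 30, 20] + [0] * 5 + [40, 80, 90, 95, 90, 80, 60, 40, 20]
             + [0] * 5 + [15, 25, 15] + [0] * 5 + [50, 85, 95, 100, 95, 85, 70, 50, 30] + [0] * 3)
    events, feats, labels = cluster_events(trace, k=2)
    for span, f, lab in zip(events, feats, labels):
        print(span, lab, f)
```

Standardizing first keeps the peak score (tens of units) from swamping durations measured in fractions of a second. The rise and decay features are the kind of measure that would let clusters separate rapid-offset from slow-offset smiles.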