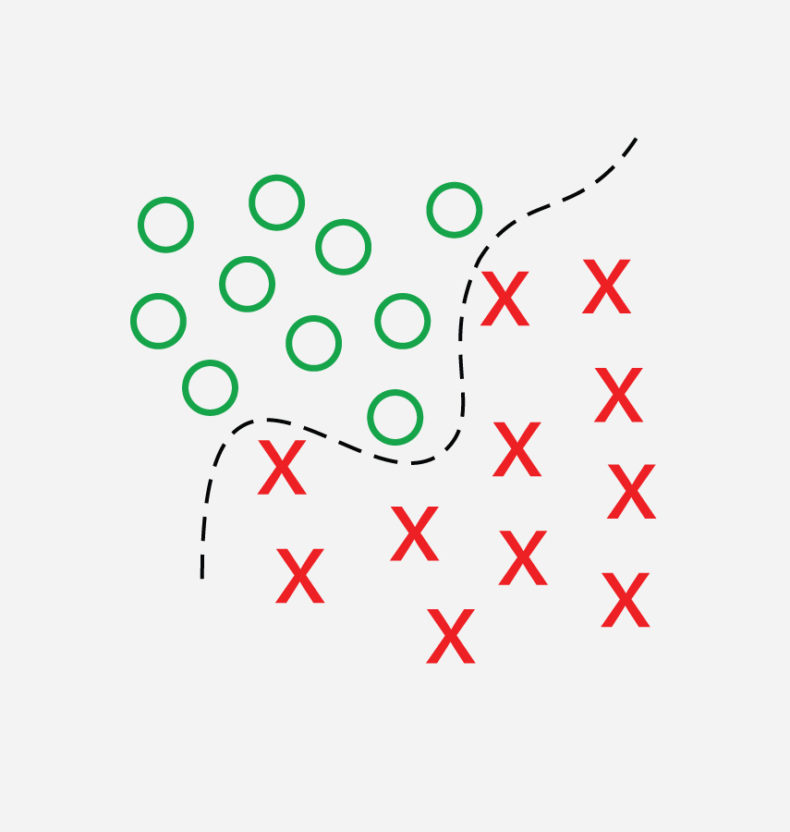

Affectiva’s technology makes use of machine learning, an area of research in which algorithms learn from examples (training data). Rather than building specific rules that identify when a person is making a specific expression, we instead focus our attention on building intelligent algorithms that can be trained (learn) to recognize expressions.

At the core of machine learning are two primary components:

- data: as in any learning task, machines learn from examples, and they learn better when they have access to large amounts of data;

- algorithms: how machines extract, condense and use information learned from examples.

In our 2015 paper, “Facial action unit detection using active learning and an efficient non-linear kernel approximation,” published at the International Conference on Computer Vision Workshops, we describe one algorithmic approach employed by Affectiva for the task of facial expression recognition.

In addition to exploring different types of Support Vector Machines (SVMs), we examine the amount of data needed to train discriminative classifiers, and we explore the tradeoff between a model's computational complexity and its accuracy. Some methods yield high accuracy, but typically at the cost of speed. Ultimately, the best model to use depends on where it is deployed and on the restrictions of that environment. One model might be suitable for batch processing on a cloud server and for driving our cloud-based APIs, while another is better suited to our mobile SDK, where processing happens on the device.

Abstract

This paper presents large-scale naturalistic and spontaneous facial expression classification on uncontrolled webcam data. We describe an active learning approach that helped us efficiently acquire and hand-label hundreds of thousands of non-neutral spontaneous and natural expressions from thousands of different individuals. With the increased numbers of training samples a classic RBF SVM classifier, widely used in facial expression recognition, starts to become computationally limiting for training and real-time performance. We propose combining two techniques: 1) smart selection of a subset of the training data and 2) the Nyström kernel approximation method to train a classifier that performs at high speed (300 fps). We compare performance (accuracy and classification time) with respect to the size of the training dataset and the SVM kernel, using either an RBF kernel, a linear kernel or the Nyström approximation method. We present facial action unit classifiers that perform extremely well on spontaneous and naturalistic webcam videos from around the world recorded over the Internet. When evaluated on a large public dataset (AM-FED) our method performed better than the previously published baseline. Our approach generalizes to many problems that exhibit large individual variability.
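To give a feel for the Nyström idea mentioned in the abstract, here is a minimal NumPy sketch. It is not the implementation from the paper. The function names (`rbf_kernel`, `nystroem_fit`, `nystroem_transform`), the random choice of landmark samples, and the `gamma` value are all assumptions made for illustration. The sketch maps each sample to a short feature vector whose dot products approximate the RBF kernel, so a fast linear classifier can stand in for a full RBF SVM.

```python
import numpy as np


def rbf_kernel(A, B, gamma):
    """RBF kernel matrix between the rows of A and the rows of B."""
    sq_dist = (A ** 2).sum(1)[:, None] + (B ** 2).sum(1)[None, :] - 2.0 * (A @ B.T)
    return np.exp(-gamma * np.maximum(sq_dist, 0.0))


def nystroem_fit(X, n_landmarks, gamma, seed=0):
    """Pick landmark samples and compute the normalizer W^(-1/2).

    Landmarks are drawn uniformly at random here (an assumption);
    the paper instead describes a smart selection of the training subset.
    """
    rng = np.random.default_rng(seed)
    idx = rng.choice(len(X), size=n_landmarks, replace=False)
    landmarks = X[idx]
    W = rbf_kernel(landmarks, landmarks, gamma)
    # Inverse square root of W via its eigendecomposition;
    # tiny eigenvalues are clipped for numerical stability.
    vals, vecs = np.linalg.eigh(W)
    vals = np.maximum(vals, 1e-12)
    normalizer = (vecs / np.sqrt(vals)) @ vecs.T
    return landmarks, normalizer


def nystroem_transform(X, landmarks, normalizer, gamma):
    """Map samples to features Z such that Z @ Z.T approximates the RBF kernel."""
    return rbf_kernel(X, landmarks, gamma) @ normalizer


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    X = rng.normal(size=(200, 8))  # stand-in for facial feature vectors
    landmarks, normalizer = nystroem_fit(X, n_landmarks=30, gamma=0.1)
    Z = nystroem_transform(X, landmarks, normalizer, gamma=0.1)
    K = rbf_kernel(X, X, gamma=0.1)
    print("feature shape:", Z.shape)
    print("max kernel approximation error:", np.abs(Z @ Z.T - K).max())
```

At prediction time, the cost per sample depends on the number of landmarks rather than on the number of support vectors, which is why this kind of approximation suits real-time use. In practice, a linear SVM would be trained on `Z`; scikit-learn, for example, offers a `Nystroem` transformer that can be paired with `LinearSVC`.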