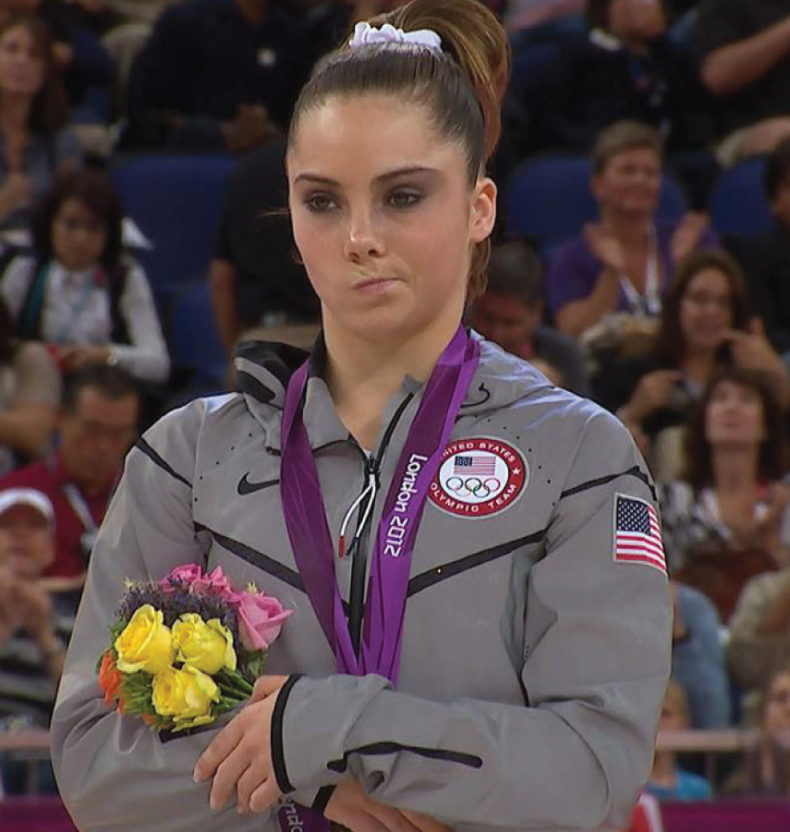

Many expressions detected by automated facial expression recognition are symmetric. Some, however, are asymmetric: the expression is more intense on one side of the face than on the other. A prime example of an asymmetric expression is the smirk. This paper, published at the 2013 IEEE Automatic Face and Gesture Recognition conference (FG 2013), describes one approach developed by Affectiva’s research team to improve the detection accuracy of asymmetric expressions.

Abstract

Asymmetric facial expressions, such as a smirk, are strong emotional signals that indicate valence as well as discrete emotional states such as contempt, doubt and defiance. Yet the automated detection of asymmetric facial action units has so far been largely ignored. We present the first automated system for detecting spontaneous asymmetric lip movements as people watched online video commercials. Many of these expressions were subtle and fleeting, and co-occurred with head movements. For each frame of the video, the face is located, cropped, scaled and flipped around the vertical axis. Both the normalized and the flipped versions of the face are fed to a right-hemiface-trained (RHT) classifier. The difference between the two outputs indicates the presence of asymmetric facial actions on a per-frame basis. The system was tested on over 500 facial videos crowdsourced over the Internet, with an overall two-alternative forced choice (2AFC) score of 88.2% on spontaneous videos. A dynamic model based on template matching is then used to identify asymmetric events that have a clear onset and offset. The event detector reduced the false alarm rate caused by tracking inaccuracies, head movement, eating and non-uniform lighting. For an event that occurs once every 20 videos, we are able to detect half of the occurrences with a false alarm rate of one event every 85 videos. We demonstrate the application of this work to measuring viewers' affective responses to video content.
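The frame-level idea, running one hemiface classifier on both a face and its mirror image and then taking the difference, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Affectiva's implementation. Faces are assumed to be already located, cropped and scaled into small grayscale arrays, and `toy_rht_classifier` is a hypothetical stand-in that scores the right half of the image array rather than a trained model. The `two_afc_score` function shows how a 2AFC score can be computed: it is the fraction of (positive, negative) pairs that the detector ranks correctly, which is equivalent to the area under the ROC curve.

```python
from typing import Callable, List, Sequence

Image = List[List[float]]  # grayscale face crop: rows of pixel intensities


def flip_vertical_axis(face: Image) -> Image:
    """Mirror the face around its vertical axis (swap left and right)."""
    return [list(reversed(row)) for row in face]


def toy_rht_classifier(face: Image) -> float:
    """Hypothetical stand-in for a right-hemiface-trained (RHT) classifier.

    It only looks at the right half of the image array and returns the mean
    intensity there; a real classifier would be trained on facial features.
    """
    width = len(face[0])
    right = [px for row in face for px in row[width // 2:]]
    return sum(right) / len(right)


def asymmetry_score(face: Image, classifier: Callable[[Image], float]) -> float:
    """Classifier output on the face minus its output on the mirrored face.

    Close to zero for a symmetric face; the sign shows which side is stronger.
    """
    return classifier(face) - classifier(flip_vertical_axis(face))


def two_afc_score(pos: Sequence[float], neg: Sequence[float]) -> float:
    """Fraction of (positive, negative) pairs ranked correctly; ties count half.

    Equivalent to the area under the ROC curve.
    """
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy usage: a symmetric face and a face that is "stronger" on the right.
symmetric = [[1.0, 2.0, 2.0, 1.0], [1.0, 2.0, 2.0, 1.0]]
lopsided = [[0.0, 0.0, 1.0, 1.0], [0.0, 0.0, 1.0, 1.0]]
print(asymmetry_score(symmetric, toy_rht_classifier))  # 0.0
print(asymmetry_score(lopsided, toy_rht_classifier))   # 1.0
print(two_afc_score([0.9, 0.8], [0.1, 0.85]))          # 0.75
```

One appeal of this design is that a single classifier scores both hemifaces, so a difference between the two outputs reflects the face itself rather than differences between two separately trained models.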