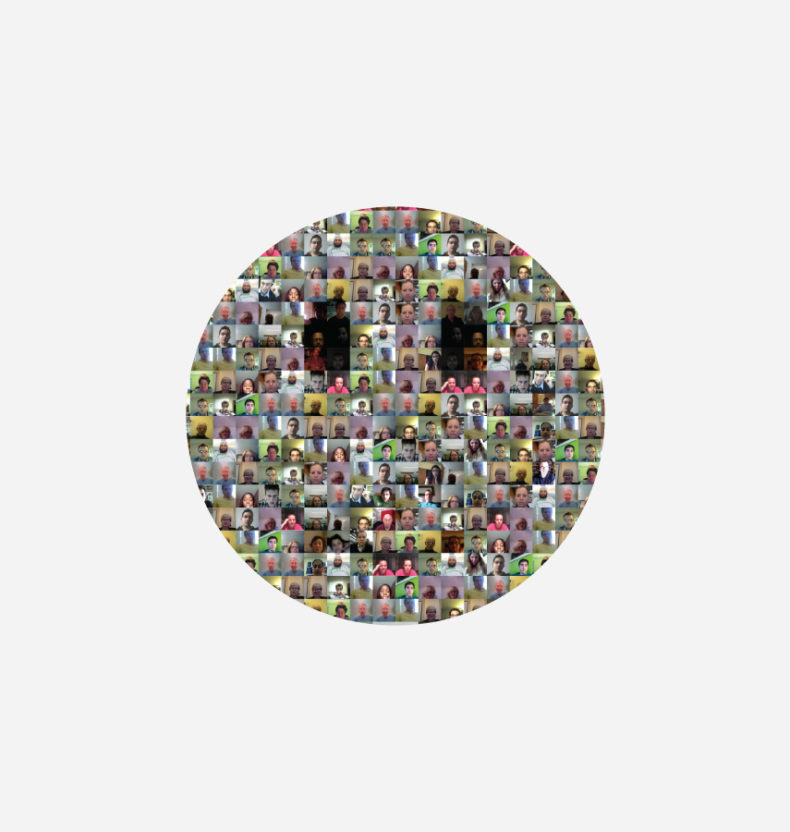

The ability to collect biometric emotional data, such as facial expressions, at scale is a powerful tool. This paper, published at IEEE Affective Computing and Intelligent Interaction 2015 (ACII 2015), outlines Affectiva’s web-based platform for collecting facial expression data. The same framework was used on a website promoted by forbes.com to crowdsource the facial response data that ultimately formed the Affectiva-MIT Facial Expression Dataset (AM-FED). The paper presents results on the success of the crowdsourcing effort, including comparisons of data distributions with those of established datasets and an overview of the findings.

Abstract

We present results validating a novel framework for collecting and analyzing facial responses to media content over the Internet. This system allowed 3,268 trackable face videos to be collected and analyzed in under two months. We characterize the data and present analysis of the smile responses of viewers to three commercials. We compare statistics from this corpus to those from the Cohn-Kanade+ (CK+) and MMI databases and show that distributions of position, scale, pose, movement and luminance of the facial region are significantly different from those represented in these traditionally used datasets. Next we analyze the intensity and dynamics of smile responses, and show that there are significantly different facial responses from subgroups who report liking the commercials compared to those who report not liking the commercials. Similarly, we unveil significant differences between groups who were previously familiar with a commercial and those who were not, and propose a link to virality. Finally, we present relationships between head movement and facial behavior that were observed within the data. The framework, the data collected, and the analysis demonstrate an ecologically valid method for unobtrusive evaluation of facial responses to media content that is robust to challenging real-world conditions and requires no explicit recruitment or compensation of participants.
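The abstract reports that distributions of facial-region properties (position, scale, pose, movement and luminance) differ significantly between the crowdsourced corpus and CK+/MMI, but it does not name the statistical test used. As an illustration only, a two-sample Kolmogorov-Smirnov (KS) test is one common way to check whether two samples of a single property, such as face-region luminance, plausibly come from the same distribution. The sketch below is pure Python and uses the standard large-sample critical value. The function names and the toy luminance values are hypothetical and are not taken from the paper or its datasets.

```python
import math
from bisect import bisect_right


def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic D = max |F_a(x) - F_b(x)|."""
    a, b = sorted(a), sorted(b)
    n, m = len(a), len(b)
    d = 0.0
    # Both empirical CDFs are step functions that only jump at observed
    # values, so evaluating them at every pooled observation finds the max gap.
    for x in a + b:
        fa = bisect_right(a, x) / n  # fraction of sample a that is <= x
        fb = bisect_right(b, x) / m  # fraction of sample b that is <= x
        d = max(d, abs(fa - fb))
    return d


def ks_critical_value(n, m, alpha=0.05):
    """Large-sample critical value; reject 'same distribution' if D exceeds it."""
    c = math.sqrt(-math.log(alpha / 2) / 2)  # about 1.358 for alpha = 0.05
    return c * math.sqrt((n + m) / (n * m))


def distributions_differ(a, b, alpha=0.05):
    """Return (reject_null, D) for a two-sample KS test at level alpha."""
    d = ks_statistic(a, b)
    return d > ks_critical_value(len(a), len(b), alpha), d


if __name__ == "__main__":
    # Hypothetical per-video mean face luminance values (0 = black, 1 = white);
    # illustrative only, NOT drawn from AM-FED, CK+ or MMI.
    web_corpus = [0.21, 0.35, 0.42, 0.28, 0.55, 0.19, 0.31, 0.47, 0.25, 0.38]
    lab_corpus = [0.62, 0.58, 0.66, 0.60, 0.64, 0.59, 0.63, 0.61, 0.65, 0.57]
    differ, d = distributions_differ(web_corpus, lab_corpus)
    print(f"D = {d:.2f}, significantly different at alpha=0.05: {differ}")
```

The asymptotic critical value is only a good approximation for reasonably large samples; with very small samples an exact KS test is the safer choice.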